As an AWS technology partner, the Zenotech team was pleased to have the opportunity to test the new GPU instance type in Amazon Web Services (AWS) EC2. Read on to find out more about our tests and the results.

The new P4d instances contain up to eight NVIDIA A100 GPUs, all connected by a 600GB/s NVSwitch interconnect. Recently made available, these instance types offer a tremendous amount of computing power per instance and the potential for massive acceleration of CFD codes that can take advantage of them.

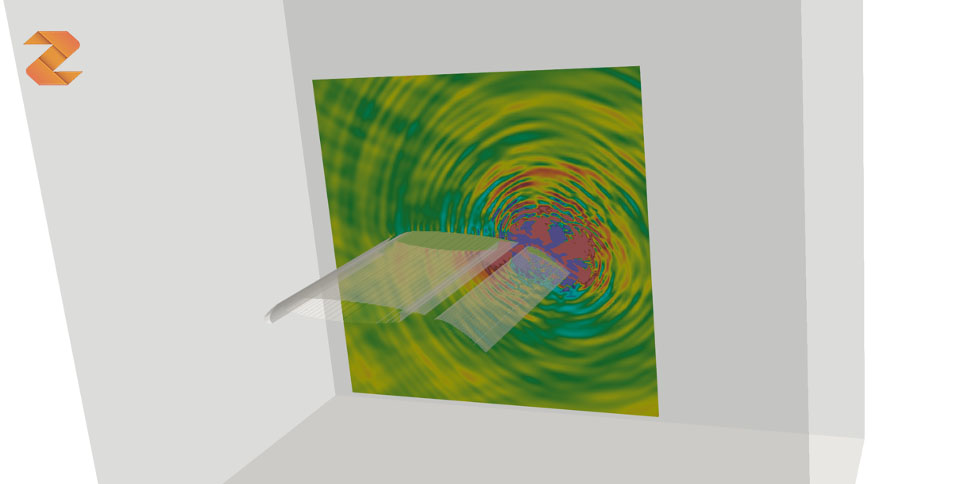

Before launch, we carried out a series of CFD runs on them using our computational fluid dynamics solver, zCFD. zCFD can offload the majority of the computation onto any CUDA-enabled GPU.

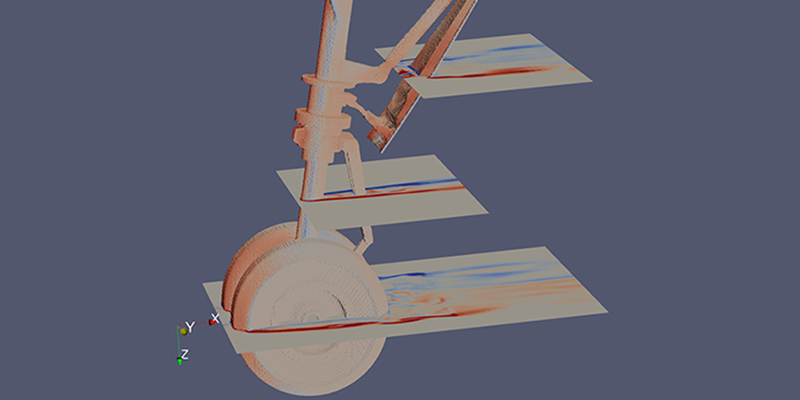

Initially, we ran the tests on a single P4d instance, which has eight A100 GPUs, 48 CPUs and 1.15TB of memory. We ran an aerospace test case that we had previously used for benchmarking (see this AWS Whitepaper). This lets us compare the P4d instances with the previous generation of P3 instances, which use NVIDIA V100 GPUs, as well as with x86 CPUs.

Starting with a 17 million cell mesh, we saw a large reduction in cycle time compared to the P3: the P4d was around three times faster on average. Each GPU on the P4d also has 40GB of memory, meaning that we can fit larger problems onto a single node and still take advantage of the GPUs. This enabled us to run a 149 million cell case on a single P4d node and achieve a 3.5 times reduction in run time.
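To give a feel for why the 40GB per GPU matters, here is a rough back-of-the-envelope sketch of the memory available per mesh cell when a case is split across the eight GPUs. It assumes an even partition, decimal gigabytes and no allowance for halo cells or solver workspace; the function name `per_gpu_budget` is ours for illustration and is not part of zCFD.

```python
def per_gpu_budget(total_cells, gpus=8, mem_per_gpu_gb=40):
    """Return (cells per GPU, upper bound on bytes available per cell).

    Assumptions (not from zCFD): the mesh is split evenly across GPUs,
    1 GB = 1e9 bytes, and all GPU memory is available to the solver.
    """
    cells_per_gpu = total_cells / gpus
    bytes_per_cell = mem_per_gpu_gb * 1e9 / cells_per_gpu
    return cells_per_gpu, bytes_per_cell


if __name__ == "__main__":
    # The two mesh sizes quoted in this post.
    for cells in (17_000_000, 149_000_000):
        per_gpu, budget = per_gpu_budget(cells)
        print(f"{cells:>11,} cells: {per_gpu:,.0f} cells/GPU, "
              f"at most {budget:,.0f} bytes/cell")
```

Under these assumptions, the 149 million cell case puts about 18.6 million cells on each GPU, leaving an upper bound of roughly 2.1kB of GPU memory per cell. The real footprint depends on the solver settings, so treat this as an order-of-magnitude guide only.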

Running a case of this size, at this speed, on a single node is quite an achievement; in comparison, an equal cycle time on an x86 cluster would require in the order of 2000 CPU cores.

As well as the upgrade in GPU performance and memory, the P4d also has an enhanced networking layer, with a bi-directional 600GB/s NVSwitch network between GPUs and the ability to scale between nodes over EFA network interfaces. EFA allows OS bypass for network communication, reducing latency and improving scaling for parallel codes. As part of the benchmarking activity, we enabled zCFD to make use of GPU-to-GPU communications through the NCCL library, which gave us an additional 15% performance increase on a single node.
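For readers unfamiliar with what a GPU-to-GPU communication library does, the sketch below simulates a ring all-reduce in plain Python, one of the collective patterns that NCCL implements. It is purely illustrative: it does not call NCCL, it is not how zCFD is implemented, and each "GPU" is just a Python list. The function name `ring_all_reduce` is our own.

```python
def ring_all_reduce(buffers):
    """Simulate a sum all-reduce over a ring of ranks.

    `buffers` holds one equal-length list of numbers per simulated GPU.
    Returns a new list of buffers in which every rank holds the
    element-wise sum. Illustration only; no NCCL calls are made.
    """
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    data = [list(b) for b in buffers]  # work on copies

    # Split each buffer into n contiguous chunks.
    bounds = [(length * i) // n for i in range(n + 1)]

    def chunk(i):
        i %= n
        return slice(bounds[i], bounds[i + 1])

    # Phase 1, reduce-scatter: each rank passes one chunk to its right-hand
    # neighbour, which adds it to its own copy. After n - 1 steps, rank r
    # holds the fully summed chunk (r + 1) % n.
    for step in range(n - 1):
        sent = [data[r][chunk(r - step)] for r in range(n)]
        for r in range(n):
            dst = (r + 1) % n
            sl = chunk(r - step)
            data[dst][sl] = [a + b for a, b in zip(data[dst][sl], sent[r])]

    # Phase 2, all-gather: the completed chunks circulate round the ring
    # until every rank has all of them.
    for step in range(n - 1):
        sent = [data[r][chunk(r + 1 - step)] for r in range(n)]
        for r in range(n):
            dst = (r + 1) % n
            data[dst][chunk(r + 1 - step)] = sent[r]

    return data


if __name__ == "__main__":
    # Eight simulated GPUs, each contributing a different vector.
    inputs = [[rank + i for i in range(10)] for rank in range(8)]
    outputs = ring_all_reduce(inputs)
    print(outputs[0])
```

In this pattern each rank only ever talks to its neighbour and sends one chunk per step, which is why a fast link between GPUs such as NVSwitch is so valuable.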

These initial results are very encouraging and show how the right combination of hardware and software can make a big impact on the performance of CFD simulations. zCFD’s ability to leverage the latest GPU technology, combined with powerful on-demand GPU instances, means that engineers can now run, on demand, simulations that would previously have required a 2000-core HPC cluster. Our tests show how dramatically this can reduce costs while speeding up simulation times.

We are now looking at running across multiple P4d nodes. For updates on the parallel scaling, sign up to our newsletter.